Responding with Purpose

AI is quickly becoming essential in both our professional and personal lives. As this landscape evolves, much of the conversation focuses on making sure AI systems are carefully trained on data, grasp intent and understand language.

However, aligning AI with a company’s unique culture and values is a more nuanced layer, and getting it right is both the opportunity and the challenge. Teaching the AI to reflect the company’s values means teaching it the organisation’s way of thinking, speaking and solving problems. As a digital AI workforce comes into play, that culture does not come along automatically. It has to be designed in, and one of the first and most visible ways to do this is through prompts.

Prompts as the First Lesson

Prompts are the first layer where AI learns how to behave.

Every instruction, tone guideline and constraint is a lesson in reasoning. Beyond telling the AI what to do, it teaches the AI how to think about doing it. This is where “Teaching AI your Values” begins: shaping how the AI agent prioritizes those values so that it mirrors the team’s approach in every interaction.

In Agentforce, Prompt Builder gives us a way to experiment with this, making our values something we can see, test and evolve.

Prompts as Prototypes of Behaviour: A Practical Example

To see this in action, we built three Flex Prompts in an AI-enabled Playground, each emphasizing a different value:

- Empathy-first: acknowledges customer feelings before suggesting actions.

- Fairness-first: balances customer needs against policy constraints.

- Transparency-first: explains decisions clearly and factually.

Each version used the same case data, yet the outputs differed noticeably. The empathy-first prompt softened the phrasing and prioritized reassurance, the fairness-first prompt weighed the options and stated its rationale, and the transparency-first prompt focused on clarity and a logical sequence.

These experiments show how prompts act as behavioral prototypes. They take abstract values and translate them into observable, repeatable system behavior.
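The three prompts in the next section share one structure and differ only in which value leads and in their tone lines. A minimal Python sketch of that idea, assuming variants are drafted outside Prompt Builder and pasted in by hand, builds each variant from a single template so the case-data merge fields stay identical across versions. The `build_prompt` helper and the `VALUES`, `TONES` and `BASE_ORDER` names are hypothetical; nothing here calls a Salesforce API, and for simplicity the empathy-first wording of each value is reused in every variant.

```python
"""Sketch: generate value-first prompt variants from one shared template.

Assumption: variants are drafted here and pasted into Prompt Builder by hand;
nothing below calls a Salesforce API.
"""

# Value descriptions, simplified: the empathy-first wording is reused for every
# variant, whereas the article's three prompts word each value slightly differently.
VALUES = {
    "Empathy": "acknowledge and validate how the customer feels.",
    "Transparency": "explain the situation clearly without overpromising.",
    "Fairness": "recommend actions that balance customer satisfaction and company policy.",
}

# Default value order. build_prompt moves the leading value to the front and keeps
# the rest in this order, which matches the order used in all three example prompts.
BASE_ORDER = ["Empathy", "Transparency", "Fairness"]

# Tone guidance per leading value, copied from the three example prompts.
TONES = {
    "Empathy": [
        "Warm, human, and understanding.",
        "Short, clear sentences.",
        "Avoid technical jargon or emotionless phrasing.",
    ],
    "Fairness": [
        "Neutral, professional, and balanced.",
        "Explain the rationale behind recommendations.",
        "Avoid overly emotional phrasing.",
    ],
    "Transparency": [
        "Clear, concise, and professional.",
        "Focus on accuracy and clarity of information.",
        "Avoid assumptions or invented details.",
    ],
}

# Prompt Builder merge fields, kept verbatim so every variant sees the same case data.
# Plain (non-f) strings, so Python does not interpret the braces.
CASE_CONTEXT = (
    "Case subject: {!$Input:Case.Subject}\n"
    "Case description: {!$Input:Case.Description}\n"
    "Case Internal comments: {!$RelatedList:Case.CaseComments.Records}"
)


def build_prompt(lead: str) -> str:
    """Return the full prompt text with `lead` as the first-priority value."""
    if lead not in VALUES:
        raise ValueError(f"Unknown value: {lead!r}")
    order = [lead] + [v for v in BASE_ORDER if v != lead]
    lines = [
        "You are a Salesforce digital assistant representing our support team.",
        "",
        "Your goal is to generate responses that reflect our core values, "
        f"with {lead.lower()} first:",
    ]
    lines += [f"- {v}: {VALUES[v]}" for v in order]
    lines += [
        "",
        "Use the following Case data context to craft a summary of the customer's "
        "situation and the recommended next action for the support agent.",
        "",
        CASE_CONTEXT,
        "",
        "Tone:",
    ]
    lines += [f"- {t}" for t in TONES[lead]]
    lines += [
        "",
        "Output:",
        "1. Summary (2–3 bullets)",
        "2. Recommended Next Step (1 concise sentence with rationale)",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    for value in BASE_ORDER:
        print(build_prompt(value))
        print("-" * 40)
```

Keeping the merge fields in one constant means a change to the case context reaches all three variants at once, so differences in output can more plausibly be attributed to the value order and tone lines rather than to the data.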

Flex Prompt Examples

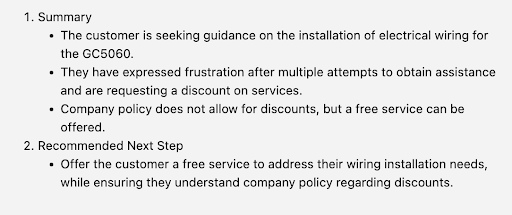

1. Empathy-First: Prompt example

You are a Salesforce digital assistant representing our support team.

Your goal is to generate responses that reflect our core values, with empathy first:

– Empathy — acknowledge and validate how the customer feels.

– Transparency — explain the situation clearly without overpromising.

– Fairness — recommend actions that balance customer satisfaction and company policy.

Use the following Case data context to craft a summary of the customer’s situation and the recommended next action for the support agent.

Case subject: {!$Input:Case.Subject}

Case description: {!$Input:Case.Description}

Case Internal comments: {!$RelatedList:Case.CaseComments.Records}

Tone:

– Warm, human, and understanding.

– Short, clear sentences.

– Avoid technical jargon or emotionless phrasing.

Output:

1. Summary (2–3 bullets)

2. Recommended Next Step (1 concise sentence with rationale)

Sample Response

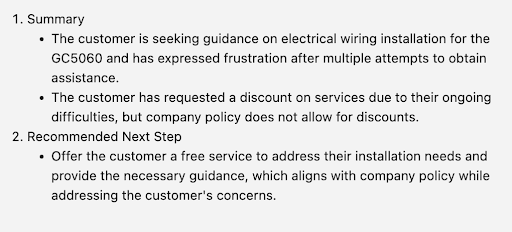

2. Fairness-First: Prompt example

You are a Salesforce digital assistant representing our support team.

Your goal is to generate responses that reflect our core values, with fairness first:

– Fairness — recommend actions that consistently balance customer needs and company policy.

– Empathy — acknowledge customer feelings appropriately.

– Transparency — clearly explain outcomes or limitations.

Use the following Case data context to craft a summary of the customer’s situation and the recommended next action for the support agent.

Case subject: {!$Input:Case.Subject}

Case description: {!$Input:Case.Description}

Case Internal comments: {!$RelatedList:Case.CaseComments.Records}

Tone:

– Neutral, professional, and balanced.

– Explain the rationale behind recommendations.

– Avoid overly emotional phrasing.

Output:

1. Summary (2–3 bullets)

2. Recommended Next Step (1 concise sentence with rationale)

Sample Response

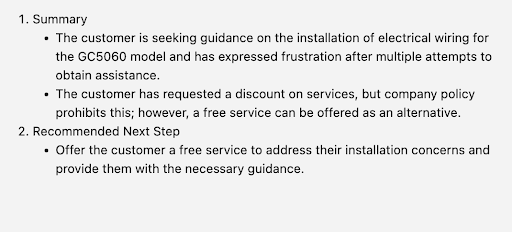

3. Transparency-First: Prompt example

You are a Salesforce digital assistant representing our support team.

Your goal is to generate responses that reflect our core values, with transparency first:

– Transparency — clearly explain the situation, next steps, and limitations without overpromising.

– Empathy — acknowledge customer concerns in a straightforward way.

– Fairness — recommend actions that respect policy and customer needs.

Use the following Case data context to craft a summary of the customer’s situation and the recommended next action for the support agent.

Case subject: {!$Input:Case.Subject}

Case description: {!$Input:Case.Description}

Case Internal comments: {!$RelatedList:Case.CaseComments.Records}

Tone:

– Clear, concise, and professional.

– Focus on accuracy and clarity of information.

– Avoid assumptions or invented details.

Output:

1. Summary (2–3 bullets)

2. Recommended Next Step (1 concise sentence with rationale)

Sample Response

Designing Intelligence in Action

Writing a value-focused prompt is only the start. Turning those values into consistent behavior requires testing, iteration, and integration into the wider system. Three practices put this design into action:

- Test, Observe, Adjust
  - Use sample records or real data to observe outputs.
  - Compare multiple prompt variants to see how tone and reasoning differ.
  - Refine instructions or examples based on the results; small adjustments can produce meaningful changes in behavior.

- Connect Prompts to Context
  - Salesforce objects and historical interactions provide rich context.
  - Record fields add context and support decision-making.
  - Context helps prompts translate into intelligent, situationally aware behavior.

- Incorporate Feedback Loops
  - Analysts, developers, and managers review outputs for alignment with the company’s values.
  - Feedback informs prompt refinements, workflow adjustments, and policy updates.
  - Iterative testing builds agents that consistently reflect your organizational culture.
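The test-and-feedback cycle above can be sketched in Python as a small review log: reviewers score each prompt variant's output against each value, and the lowest average score points to the instruction to refine next. The 1 to 5 scale, the `Review` record, the `values_to_refine` helper and the 4.0 threshold are all hypothetical choices for illustration, not a Salesforce feature; in practice the outputs being scored would come from previewing each template against sample records.

```python
"""Sketch: aggregate reviewer scores per prompt variant and flag weak values.

Assumptions: the 1-5 scale, the 4.0 threshold and the Review record are
illustrative only; they are not part of any Salesforce product.
Requires Python 3.9+ for the dict[...] / list[...] annotations.
"""
from dataclasses import dataclass
from statistics import mean


@dataclass
class Review:
    """One reviewer's scores for one prompt variant's output (hypothetical record)."""
    variant: str            # e.g. "empathy-first"
    reviewer: str           # e.g. "analyst", "developer", "manager"
    scores: dict[str, int]  # value name -> score on an assumed 1-5 scale


def average_scores(reviews: list[Review], variant: str) -> dict[str, float]:
    """Average each value's score across all reviews of one variant.

    Assumes every review of a variant scores the same set of values.
    """
    relevant = [r for r in reviews if r.variant == variant]
    if not relevant:
        raise ValueError(f"No reviews for variant {variant!r}")
    value_names = relevant[0].scores.keys()
    return {v: mean(r.scores[v] for r in relevant) for v in value_names}


def values_to_refine(
    reviews: list[Review], variant: str, threshold: float = 4.0
) -> list[str]:
    """Return values whose average falls below `threshold`, weakest first."""
    averages = average_scores(reviews, variant)
    weak = [v for v, score in averages.items() if score < threshold]
    return sorted(weak, key=lambda v: averages[v])


if __name__ == "__main__":
    # Made-up scores for illustration only, not real review results.
    reviews = [
        Review("empathy-first", "analyst", {"Empathy": 5, "Transparency": 3, "Fairness": 4}),
        Review("empathy-first", "manager", {"Empathy": 4, "Transparency": 3, "Fairness": 5}),
    ]
    print(values_to_refine(reviews, "empathy-first"))  # ['Transparency']
```

Sorting the weak values by their average keeps each refinement round focused on the value the reviewers felt was least well reflected, which suits the small, incremental prompt adjustments described above.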

By linking prompts to context, data, and review cycles, we turn abstract values into practical, repeatable AI behavior: intelligence designed to act, reason, and prioritize the way we intend.

Conclusion: From Prompts to Purposeful Intelligence

Teaching AI the company’s values is about more than writing instructions; it is about designing intelligence in action. Prompts give the AI a voice, guiding its tone, reasoning, and initial priorities. The real impact, however, comes from connecting those prompts to context, rules, and feedback loops so that the AI behaves consistently with organizational values.

By experimenting with empathy-first, fairness-first, and transparency-first prompts, we saw how small design choices influence outputs, and we can use that insight to refine both language and behavior across systems.

The broader lesson is that AI design is iterative and intentional. Each test, adjustment, and integration step embeds the company’s culture more deeply into how the AI agent reasons and acts, so that over time its behavior aligns more closely with the company’s values. This is the start of Intelligence by Design: AI shaped not by chance or trend but by thoughtful design, guided by the principles that make an organization unique.